Introduction

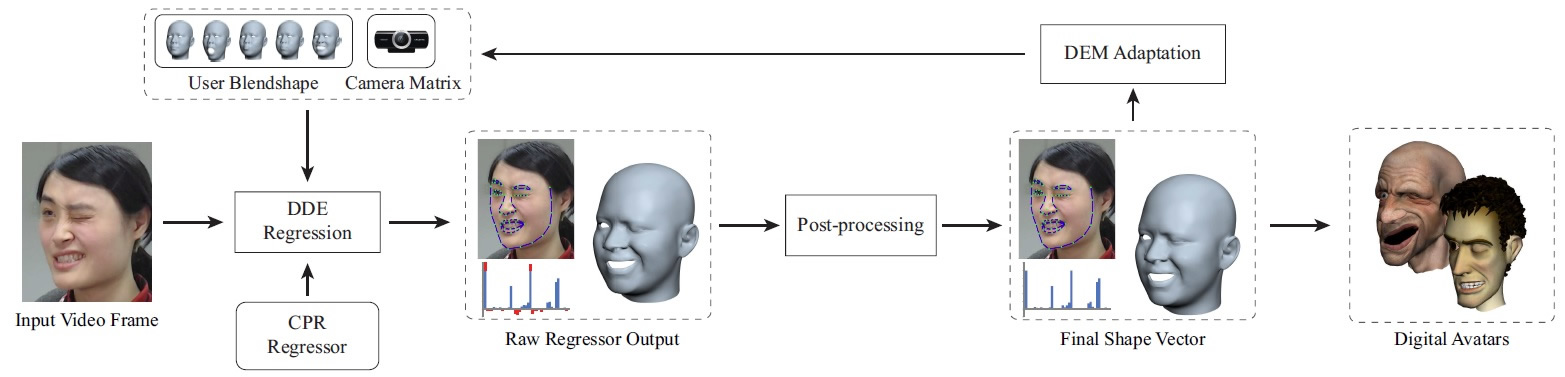

We present a fully automatic approach to real-time facial tracking and animation with a single video camera. Our approach requires no calibration for individual users. It learns a generic regressor from public image datasets, which can be applied to any user and arbitrary video cameras to infer accurate 2D facial landmarks as well as the 3D facial shape from 2D video frames. The inferred 2D landmarks are then used to adapt the camera matrix and the user identity to better match the facial expressions of the current user. The regression and adaptation are performed in an alternating manner. As more facial expressions are observed in the video, the whole process converges quickly, yielding accurate facial tracking and animation. In experiments, our approach demonstrates a level of robustness and accuracy on par with state-of-the-art techniques that require a time-consuming calibration step for each individual user, while running at 28 fps on average. We consider our approach to be an attractive solution for wide deployment in consumer-level applications.

We publish the training data for research purposes. We used 14,460 facial images from three public image datasets. For each image, 74 landmarks were labelled to describe the positions of a set of facial features, e.g., the mouth corners, the nose tip, and the face contour. The landmark files can be downloaded directly from this website; the image files are available from each dataset's own website.

(1) FaceWarehouse (5,904 images) [Images] [Landmarks]

(2) Labeled Faces in the Wild (7,258 images) [Images] [Landmarks]

(3) GTAV Face Database (1,298 images) [Images] [Landmarks]

Landmark File Format

74 // Number of landmarks

246.039673 254.651047 // Position of the 1st landmark

245.308151 236.336166 // Position of the 2nd landmark

…

344.440308 251.455673 // Position of the 74th landmark
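The format above can be read with a few lines of code. The following is a minimal sketch, not an official loader. It makes three assumptions: the file is plain text with whitespace-separated values, the two numbers of each position are in (x, y) order, and any `//` annotations (which may appear only in the description above and not in the actual files) can be discarded. The function name `parse_landmarks` is hypothetical.

```python
from typing import List, Tuple


def parse_landmarks(text: str) -> List[Tuple[float, float]]:
    """Parse landmark file contents into a list of (x, y) pairs.

    Assumed layout: the first number is the landmark count, followed
    by that many coordinate pairs. The (x, y) order is an assumption.
    """
    tokens: List[str] = []
    for line in text.splitlines():
        # Drop any trailing "// ..." annotation, if the file has them.
        line = line.split("//", 1)[0]
        tokens.extend(line.split())

    if not tokens:
        raise ValueError("empty landmark file")

    count = int(tokens[0])
    values = [float(t) for t in tokens[1:]]
    if len(values) != 2 * count:
        raise ValueError(
            f"expected {2 * count} coordinates, found {len(values)}"
        )

    # Group the flat coordinate list into consecutive pairs.
    return [(values[2 * i], values[2 * i + 1]) for i in range(count)]


if __name__ == "__main__":
    # A shortened two-landmark sample using values from the description.
    sample = "2\n246.039673 254.651047\n245.308151 236.336166\n"
    for index, (x, y) in enumerate(parse_landmarks(sample), start=1):
        print(index, x, y)
```

Because the parser works on a flat token stream, it accepts a file whether each position sits on one line or is split across two. For the published files, the returned list should contain 74 pairs.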

Reference

Cao Chen, Qiming Hou, Kun Zhou: "Displaced Dynamic Expression Regression for Real-time Facial Tracking and Animation", ACM Transactions on Graphics (SIGGRAPH), 2014. [PDF] [Video]

Related Work

Cao Chen, Yanlin Weng, Shun Zhou, Yiying Tong, Kun Zhou: "FaceWarehouse: a 3D Facial Expression Database for Visual Computing", IEEE Transactions on Visualization and Computer Graphics, 20(3): 413-425, 2014. [Website]

Cao Chen, Yanlin Weng, Steve Lin, Kun Zhou: "3D Shape Regression for Real-time Facial Animation", ACM Transactions on Graphics (SIGGRAPH), 32(4): 41, 2013. [PDF] [Video]

Yanlin Weng, Cao Chen, Qiming Hou, Kun Zhou: "Real-time Facial Animation on Mobile Devices", Graphical Models, 76(3): 172-179, 2014. [PDF] [Video]